ISEF Symposia: Deploying artificial intelligence responsibly

Regeneron ISEF is the world’s largest precollegiate STEM competition—but the fun isn’t just in seeing who takes home the top awards. In addition to presenting their research and meeting with judges, this year’s finalists took part in a wide range of events and activities. From the always memorable Pin Exchange, to Panel Discussions with leaders across STEM fields, to an excursion to the renowned Georgia Aquarium, this year’s finalists navigated some jam-packed itineraries.

One highlight of the fair’s annual programming is the series of symposia that finalists (along with their parents, chaperones and fair directors) are invited to attend throughout the week. The topics ranged widely, from college admissions overviews to a welcome into the Society’s Alumni Community to a seminar on supercomputers. More than two dozen sessions offered useful how-tos as well as conversations with experts in diverse fields.

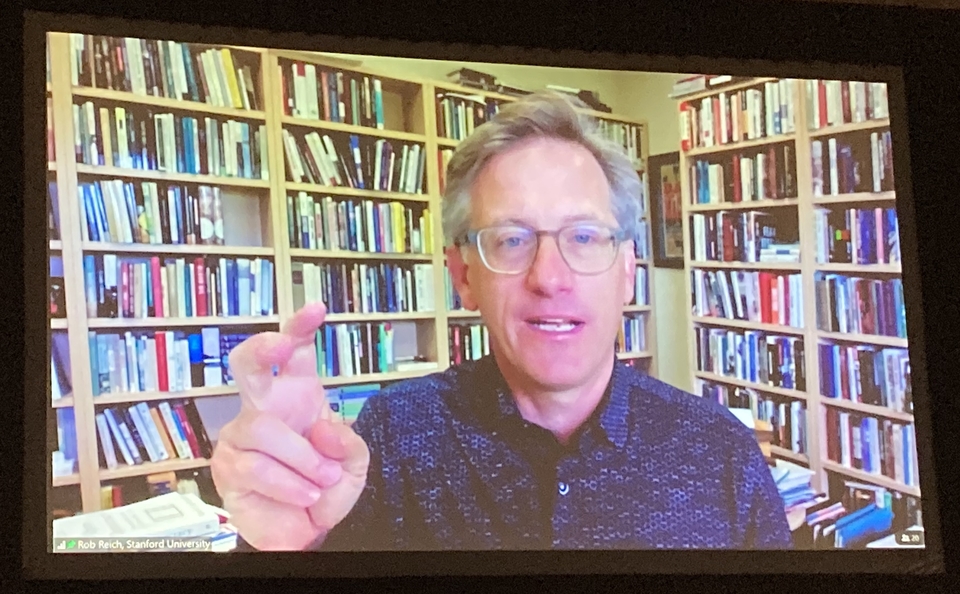

One engaging symposium, titled “Before You Build: Putting Public Interest at the Heart of Science and Technology,” was facilitated by Stanford University professor of political science Rob Reich, coauthor of the book System Error: Where Big Tech Went Wrong and How We Can Reboot. The session explored some of the ethical and regulatory questions raised by cutting-edge technologies that, for better or worse, are already shaping many aspects of our lives.

From the gene-editing technology CRISPR to GPT-3, an artificial intelligence model with an uncanny ability to generate language that mimics human writing, Reich considered technological developments that until recently were possible only in science fiction. A central premise of Reich’s remarks was the recognition of a sober truth: The potential of these technologies to transform the world for good is often matched by their capacity to do great harm if deployed haphazardly.

In Reich’s estimation, this is a challenge facing not only scientists and inventors, but also society as a whole. As we, and particularly the next generation of innovators, including this year’s ISEF finalists, push the limits of what is technologically possible, we must also develop standards that will ensure powerful new tools are used responsibly.

After Reich’s remarks, finalists had an opportunity to ask questions. Here is one short excerpt from the thought-provoking session:

ISEF finalist question: “There is a possibility that technology can produce video edits that falsify events. This of course can have a huge impact on the justice system. Are you suggesting that we specifically try to remove these elements from the human technological spectrum because they can have such a large impact? Or how do we find a way to mitigate those effects?”

Rob Reich: “Models like GPT-3 are showing unbelievable power in producing text that is of human-level quality. In my book System Error, there’s a paragraph in the last chapter written by GPT-3—it’s a little easter egg. It’s not easy to identify because GPT-3 is indeed so powerful.

“My attempt to answer the question is that you don’t want AI scientists alone to decide how to release a model, or to think about the applications of such a model. You want to combine an orientation of social science training and ethics training that can be present in the lab at the moment of creation. At Stanford, for example, there’s a research project called the Center for Research on Foundation Models—foundation models is what Stanford calls these large language models. I’m one of the co-directors, along with someone who’s a natural language processing expert, along with a whole host of other social scientists who are studying the ways in which these language models can reproduce stereotypes, or amplify hate speech or, even worse, potentially mobilize a disinformation campaign around COVID.

“It is absolutely essential to take a multi-disciplinary approach to thinking about this technology, and to the sociotechnical benchmarks we use to stitch together responsible norms for the conduct of AI scientists, companies and beyond. We are just discovering the very potential of this technology, but it shouldn’t be left to technologists alone to answer those questions.”

With increasing numbers of innovators and researchers developing solutions to problems using tools like machine learning and artificial intelligence, questions around how technology can be of value to humanity and best integrate into our societies have never been more important. In the face of these difficult questions, we find hope in the many ISEF participants whose passion for STEM is matched only by their drive to make the world a better place.

Thank you to Rob and to everyone else who contributed to this year’s symposia for sharing their insights with this year’s finalists and attendees.